
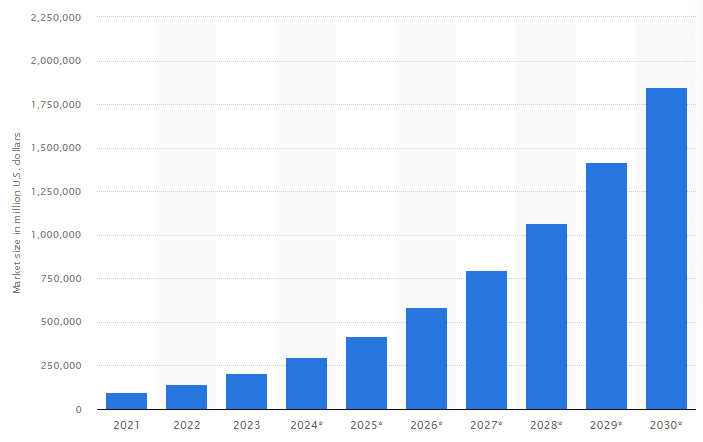

The dream of ubiquitous AI is rapidly becoming a reality. Statista forecasts that the global AI market will reach roughly $1.847 trillion by 2030, which points to strong interest in the technology across business sectors.

Artificial intelligence (AI) market size worldwide in 2021, with a forecast until 2030 (in million U.S. dollars). Source: Statista, 2024

The high level of investment in this segment reflects its promise. However, even in 2024, training AI and applying it in practice carries a number of risks. The key issues concern data: its validity, relevance, and bias. How well an AI system develops and performs its tasks depends on the quality of its information sources, its learning algorithms, and how its accuracy is monitored.

In this article, you will learn about the challenges of AI and ML, the phenomenon of bias, and ways to improve tools built on the technology.

Methodologies of Machine Learning in AI

According to research by Salesforce analysts, approximately 86% of IT leaders consider the technology a catalyst for active industry transformation. As a result, specialists are constantly working to improve AI and ML algorithms, focusing on the needs of the businesses for which these tools are developed.

Three common methods are used to achieve this. Each differs fundamentally from the others and suits a particular kind of goal, and for a given use case one of them may deliver a better ROI than the rest. Let’s take a closer look at each and at its specific advantages.

Unsupervised Learning

With this method, the model is trained on data that carries no human-provided labels. The algorithm groups, analyzes, and processes the data on its own, looking for patterns and structure. The scenarios and criteria for learning can differ widely, but no one tells the model what the correct answer is.

The resulting model can be used to generate content, partially model situations, make forecasts, and so on. However, this learning methodology is particularly susceptible to bias and errors, because whatever skew the data contains is absorbed without correction.

Due to the non-intervention policy, AI may perceive information incorrectly, forming a somewhat distorted “consciousness.” This does not mean that the output will be entirely erroneous, but a person should still fact-check it.
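To make the idea of learning without labels concrete, here is a minimal sketch of one classic unsupervised technique, k-means clustering, reduced to one dimension. The data, the function name `kmeans_1d`, and the choice of two clusters are assumptions made for illustration; the sketch also assumes `k` is at least 2.

```python
# Illustrative only: a tiny 1-D k-means, one classic unsupervised method.
# The data and the choice of k=2 are assumptions made for this sketch.

def kmeans_1d(points, k, iterations=20):
    """Group unlabeled numbers into k clusters (k >= 2); returns (centroids, labels)."""
    # Deterministic start: spread the initial centroids across the sorted data.
    ordered = sorted(points)
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(p - centroids[c])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, labels

# No labels are supplied; the two groups emerge from the data alone.
values = [1.0, 1.2, 0.8, 9.0, 9.5, 8.7]
centroids, labels = kmeans_1d(values, k=2)
print(centroids, labels)
```

Nothing in this loop checks whether the groups it finds are meaningful or fair. If the input over-represents one kind of record, the clusters will reflect that, which is why the output still needs a human review.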

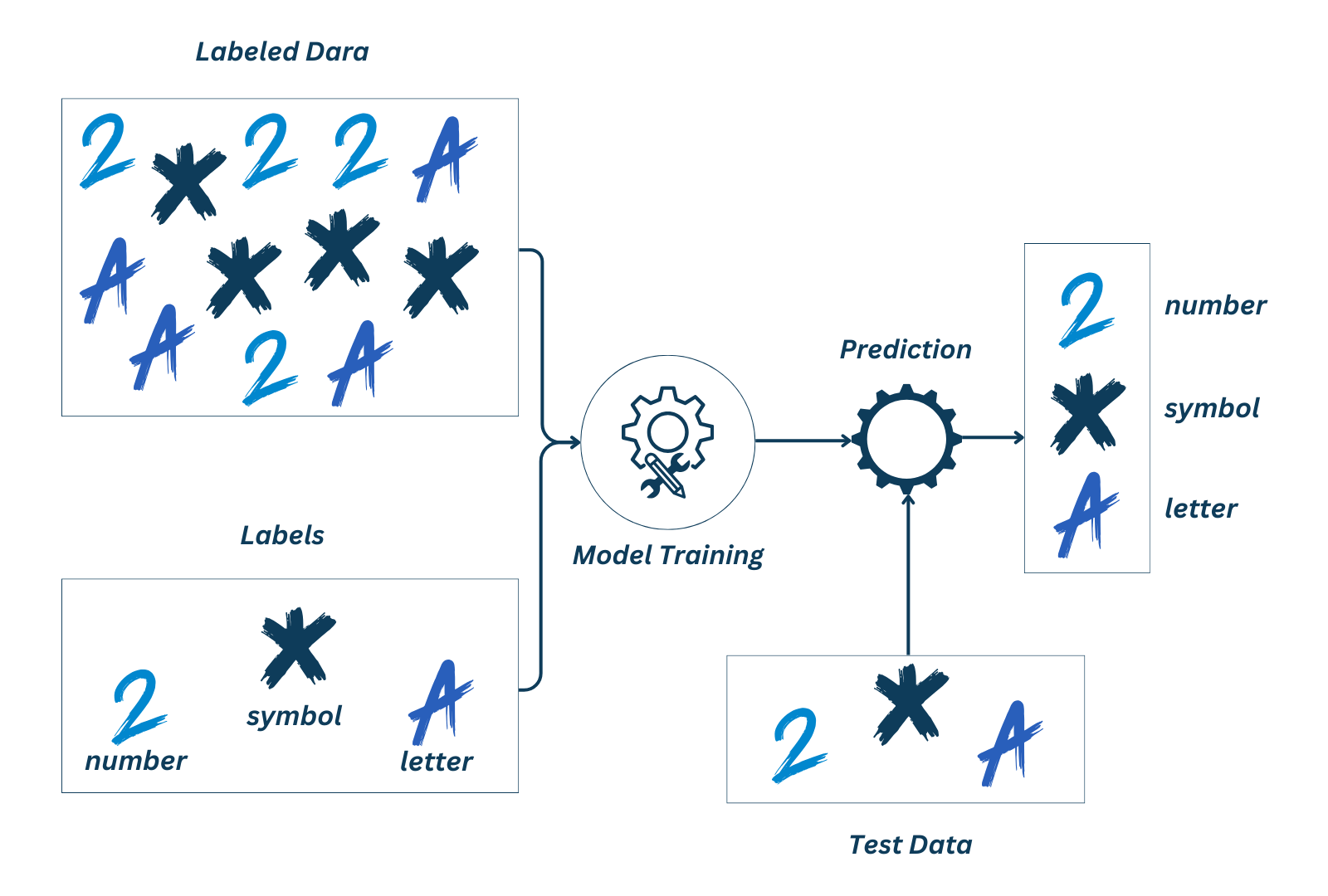

Supervised Learning

This is the most restricted but most predictable learning approach. The model is trained on clearly defined sources in which every example is paired with the correct answer (a label), and its results are continuously checked against those answers.

A supervised model can perform important operations, such as simulating business situations, forecasting development, and generating ideas and concepts, with high accuracy, provided the task resembles its training data. However, a bot based on this model may not offer striking innovations, as it “thinks” within set boundaries.

Preparing labeled data is costly, so this type of learning is not the most efficient. It is, however, the most understandable and convenient choice when you need to minimize risks and build a balanced, transparent decision-making algorithm.
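As a rough illustration of learning from labeled examples, the sketch below uses a 1-nearest-neighbour classifier, one of the simplest supervised methods. The feature values, the label names, and the `predict` helper are all invented for this example.

```python
# Illustrative only: a 1-nearest-neighbour classifier on hand-labeled data.
# Feature values and label names are invented for this sketch.

def predict(training_set, features):
    """Return the label of the training example closest to `features`."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    _, nearest_label = min(
        training_set, key=lambda example: squared_distance(example[0], features)
    )
    return nearest_label

# Each example pairs an input with the answer a human supplied for it.
labeled_examples = [
    ((1.0, 1.0), "low_risk"),
    ((1.5, 0.5), "low_risk"),
    ((8.0, 9.0), "high_risk"),
    ((9.0, 8.5), "high_risk"),
]

print(predict(labeled_examples, (1.2, 0.9)))
print(predict(labeled_examples, (8.5, 8.0)))
```

The model can only ever return a label it has already seen, which is the “set boundaries” limitation in practice. It also inherits any mistakes or prejudice present in the labels themselves.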

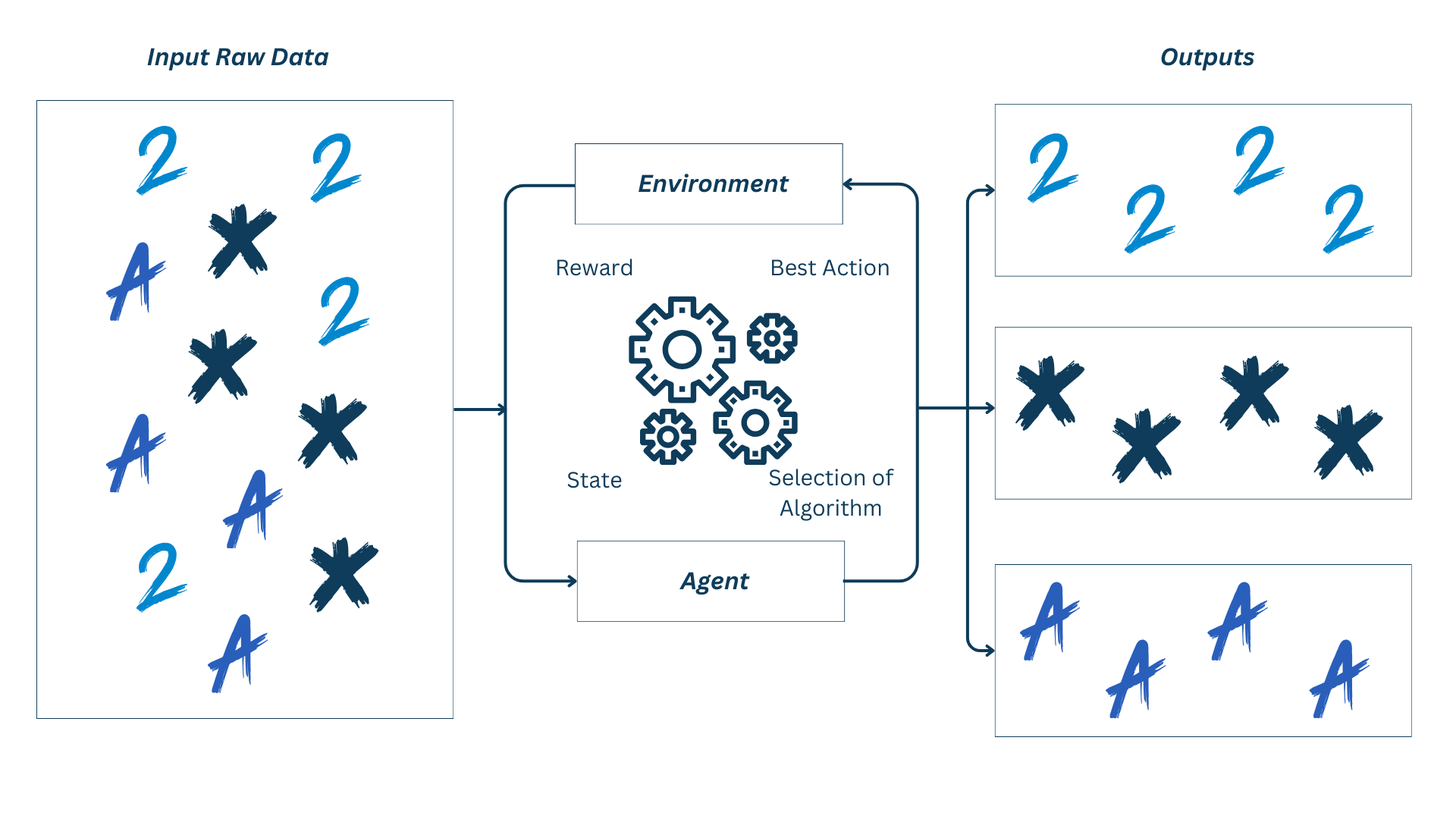

Reinforcement Learning

This approach can be called a hybrid, as in practice it is often layered on top of the previous ones. The AI absorbs information from broad sources, but its outputs are then scored: responses that reviewers or automated checks rate well are rewarded, poor ones are penalized, and the model adjusts accordingly.

Thus, the model’s behavior is shaped by human moral and ethical judgment, and it learns which kinds of answers are acceptable. This type of learning is the most demanding, requiring active monitoring by technical experts. When done well, it can also be the most effective at producing accurate, appropriate responses.

Algorithms of this kind can generate high-quality results, so the range of applications is practically unlimited. The reinforcement learning model allows AI to create potentially winning concepts, strategies, recommendations, etc.

All of the models mentioned are effective in certain segments, but only if the algorithms are developed by experts who understand the risks of ML and AI and know how to mitigate them.
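The feedback loop behind reinforcement learning can be sketched with a small “multi-armed bandit” example, in which an agent repeatedly picks one of several options and learns from the reward it receives. The reward values, the exploration rate `epsilon`, and the function name `train_agent` are assumptions for illustration; real systems, including those trained on human feedback, are far more involved.

```python
import random

# Illustrative only: an epsilon-greedy agent on a three-option "bandit" problem.
# The reward values, epsilon, and step count are assumptions for this sketch.

def train_agent(rewards, steps=200, epsilon=0.1, seed=0):
    """Learn by trial and error which option yields the highest reward."""
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)   # the agent's current belief per option
    counts = [0] * len(rewards)        # how often each option was tried
    for step in range(steps):
        if step < len(rewards):
            action = step                               # try every option once
        elif rng.random() < epsilon:
            action = rng.randrange(len(rewards))        # explore
        else:
            action = max(range(len(rewards)), key=lambda a: estimates[a])  # exploit
        reward = rewards[action]                        # feedback from the environment
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = train_agent(rewards=[0.2, 0.9, 0.5])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, counts)
```

The agent ends up favoring whatever the reward signal favors. If the people or rules that assign rewards are biased, the model learns that bias just as faithfully, which is why expert monitoring matters here.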

Risks Arising During the Learning Process

According to Statista analysts, among the most common risks of applying generative AI for business purposes, the following are highlighted:

- Inaccuracy is a concern for 56% of respondents.

- Cybersecurity worries about 53% of respondents.

- Intellectual property violations are a concern for 46% of those surveyed.

- Legal and regulatory issues concern 45% of respondents.

- Data ambiguity is a concern for 39% of respondents.

- Confidentiality matters to 39% of respondents.

It is worth noting that experts actively explore possibilities to mitigate or compensate for the mentioned risks. For example, about 38% of respondents have already found ways to partially or fully address cybersecurity issues in AI and ML algorithms.

Even so, some risks remain especially costly to address, and the return on the training effort does not always justify the investment. Let’s consider a few examples.

Inaccuracy of Input Data

Training bots using massive amounts of information is potentially risky, especially if the sources are not manually verified. AI can acquire irrelevant or even erroneous data, which it will then use to formulate responses to specific queries. This creates several risky situations, such as:

- The AI core becomes saturated with low-quality information.

- Algorithms generate output data based on false input materials.

- Using such solutions potentially leads to losses for AI users (businesses).

Therefore, it is essential to manually verify the data you plan to use for AI training.
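Manual review scales better when obviously bad records are screened out first. The following is a minimal sketch of such a pre-check; the record fields (`text`, `label`, `source`), the allowed labels, and the list of trusted sources are hypothetical and would need to be replaced with rules that fit your own data.

```python
# Illustrative only: rule-based screening of training records before use.
# The record fields ("text", "label", "source") and the allowed values are
# hypothetical; real pipelines need checks suited to their own data.

ALLOWED_LABELS = {"positive", "negative"}
TRUSTED_SOURCES = {"internal_crm", "reviewed_survey"}

def screen_records(records):
    """Split records into (accepted, rejected_with_reason)."""
    accepted, rejected, seen_texts = [], [], set()
    for record in records:
        text = (record.get("text") or "").strip()
        if not text:
            rejected.append((record, "empty text"))
        elif record.get("label") not in ALLOWED_LABELS:
            rejected.append((record, "unknown label"))
        elif record.get("source") not in TRUSTED_SOURCES:
            rejected.append((record, "unverified source"))
        elif text.lower() in seen_texts:
            rejected.append((record, "duplicate"))
        else:
            seen_texts.add(text.lower())
            accepted.append(record)
    return accepted, rejected

raw = [
    {"text": "Great service", "label": "positive", "source": "internal_crm"},
    {"text": "great service", "label": "positive", "source": "internal_crm"},
    {"text": "", "label": "negative", "source": "reviewed_survey"},
    {"text": "Slow delivery", "label": "angry", "source": "reviewed_survey"},
    {"text": "Too expensive", "label": "negative", "source": "random_forum"},
]
accepted, rejected = screen_records(raw)
print(len(accepted), [reason for _, reason in rejected])
```

Checks like these catch only mechanical problems. Whether an accepted record is actually true still has to be judged by a person.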

Misinterpretation of Information

Whether an AI “understands” anything is debatable, so expecting a bot to interpret information correctly after unsupervised learning is unrealistic. How data is processed depends primarily on the algorithms, which should be updated regularly. Evaluation systems should also be in place to flag potential errors and steer the AI toward an adequate perception of the data.

Accentuation Bias

If the way the AI weighs its sources is not deliberately designed, secondary content can become its main source of information during training. The result is a dysfunctional system that leans on minor claims or marginal sources, which ultimately undermines the effectiveness and practical usefulness of the trained bot.

Algorithmic Errors

We are responsible for what we create, and AI training is no exception: engineers define the algorithms for absorbing, processing, and interpreting information. This work combines software development, mathematical programming, and NLP components, so it is easy to introduce one or more errors. The possible consequences include:

- Incorrect prioritization of learning.

- Issues with information analysis.

- Erroneous output results/answers to queries.

Clearly, output produced this way cannot serve as the basis for important decisions. Active work on refining AI learning algorithms in line with established best practices is therefore crucial.

User Mistakes

Users can also cause problems with AI learning, especially when the model is improved collectively. They may input incorrect information, attempt to distort facts, or push algorithms toward unlawful actions, so this aspect of ML depends on the users’ morality.

Therefore, if you are developing AI and training it openly, take care of the ethical aspect, even at the algorithmic level.

Several Cases of AI Errors and Their Consequences

The limitations of the algorithms, the selectivity of the data, and the behavior of the audience all affect how well AI-based tools perform. As of 2024, there are numerous documented cases of AI working with inaccurate data, along with the consequences. Let’s explore some examples:

- Healthcare: The accuracy of computer-aided diagnosis (CAD) systems can vary with a patient’s gender, ethnicity, and other characteristics. This is because the amount of training data differs between population groups.

- Recruiting: NLP and its text recognition algorithms play a crucial role here. HR systems with AI, for instance, may prefer candidates who use specific words more frequently (not to be confused with data-driven filters for resume processing). This potentially screens out many qualified candidates.

- Digital Marketing: AI algorithms in search engines, for example, may sometimes rely on the user’s gender when displaying advertisements. Due to such bias, men might see offers for high-paying positions more frequently than women with similar education and skills.

- Generation of Digital Visual Content: The same issues apply to creating images from a set of parameters. For instance, elderly men in prestigious positions may be more common in generated content than women. This underscores the imperfection of AI learning algorithms.

- Intelligent Monitoring Systems: Predicting violations using AI is an interesting concept that, in practice, turns into a problem. Algorithms use historical locations, events, and their participants to form forecasts. As a result, the forecasts become biased against people whose race differs from that of a region’s majority population.

Given how immature AI technology is, these shortcomings could be forgiven were there no risk of them growing worse over time and turning into serious problems. To mitigate them, more effort should go into building better AI learning algorithms, with a focus on ethics and user morality.

How to Avoid These Problems?

We have prepared a set of recommendations to help you eliminate potential negative consequences of using biased and intolerant AI in practice. With these, you’ll build an effective training strategy for your bot and optimize it for the specific needs of your business or a particular set of tools.

Investigate Bias

Bias has its sources, ranging from fiction to online resources, research, and archives used for AI training. Filter out content that could lead the bot to form a misguided perception of information, and use testing to check periodically what the algorithm has actually learned.
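One simple form such periodic testing can take is comparing the model’s accuracy across groups on a held-out test set. The sketch below assumes each test row carries a group tag alongside the true and predicted labels; the group names and values are made up for illustration.

```python
from collections import defaultdict

# Illustrative only: compare a model's accuracy across groups.
# The group names, labels, and predictions are made up for this sketch.

def accuracy_by_group(rows):
    """rows: iterable of (group, true_label, predicted_label)."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, truth, prediction in rows:
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}

results = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 1, 0),
]
scores = accuracy_by_group(results)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)
```

A large gap between groups does not prove bias on its own, but it is a clear signal that the training data for the weaker group deserves a closer look.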

Incorporate Adequate Training Strategies

Since AI is still in its early stages, improvements are being made transparently and openly. Study cases of other market players and consult with others to form effective and correct training algorithms for your bot. Use best practices to optimize data sources, verify them, and ethically process them.

Set Priorities

Use data sources directly or indirectly related to your field of work. Shape the algorithms so that, as the bot absorbs material, it does not cast specific user categories in a more positive or negative light. Strike a balance between historical accuracy and contemporary moral and ethical norms.
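Whether a system treats user categories differently can be checked by comparing how often each group receives a favorable decision. The following sketch computes per-group selection rates and their ratio; the group names, the decisions, and the `selection_rates` helper are invented for illustration.

```python
# Illustrative only: compare how often each group receives a positive decision.
# Group names and decisions are invented; 1 means "selected", 0 means "rejected".

def selection_rates(decisions):
    """decisions: iterable of (group, decision) pairs."""
    totals, positives = {}, {}
    for group, decision in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {group: positives[group] / totals[group] for group in totals}

decisions = [
    ("women", 1), ("women", 0), ("women", 0), ("women", 0),
    ("men", 1), ("men", 1), ("men", 1), ("men", 0),
]
rates = selection_rates(decisions)
ratio = min(rates.values()) / max(rates.values())
print(rates, ratio)
```

A ratio close to 1 means the groups are selected at similar rates. How far below 1 is acceptable depends on the use case and on any legitimate differences between the groups, so treat the number as a prompt for investigation rather than a verdict.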

Diversify Your Team

Involve multidisciplinary, gender-diverse, and multicultural experts in AI training. Form a cross-functional team that trains the model without bias or discrimination. This will help to educate the bot more effectively and cultivate a neutral understanding of the physical and digital worlds.

Adjust the Model to Neutralize Established Biases

Bias in AI is a recognized problem, and actively working on it is crucial. Mix data, improve algorithms, conduct testing, and make adjustments to the “consciousness” of your AI to neutralize potential risks.
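One common way to “mix” data is to reweight training examples so that an under-represented group counts as much as a dominant one. The sketch below shows this heuristic in its simplest form; the group names and the `balancing_weights` helper are assumptions, and this is only one of several possible rebalancing techniques.

```python
from collections import Counter

# Illustrative only: weight training examples so every group contributes equally.
# This is one simple rebalancing heuristic, not the only way to reduce bias.

def balancing_weights(groups):
    """Return one weight per example, inversely proportional to group frequency."""
    counts = Counter(groups)
    n_groups, n_examples = len(counts), len(groups)
    return [n_examples / (n_groups * counts[g]) for g in groups]

groups = ["group_a"] * 8 + ["group_b"] * 2   # group_b is under-represented
weights = balancing_weights(groups)
print(weights[0], weights[-1], sum(weights))
```

With these weights, each group contributes the same total weight to training, while the overall sum stays equal to the number of examples. Reweighting cannot invent information that was never collected, so it works best alongside gathering more data for the smaller group.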

Actively Modernize the Training Model

AI is a child that requires your guidance during training. There is no single definitively right or wrong direction here. ML is a field for experimentation in which the expert decides how advanced the algorithm can become. Therefore, improve learning principles, use advanced practices, and experiment!

Only in this way will you create a bot that precisely meets the needs of the business and is free from biases, ethical-moral problems, etc.

Final Word

Bias in AI, flawed judgments, and erroneous output are the results of poorly optimized bot training. Implementing technology with such issues can have long-term consequences for businesses, such as reputation loss and revenue reduction.

As of 2024, the technology is still in the developmental stage, so the direction it takes depends on the actions of both developers and users, who, to some extent, contribute to the training of bots.

Since there are no clear and comprehensive laws regarding the use of AI yet, the responsibility for the future of the technology currently lies with bot owners and the audience using them. Therefore, let’s approach AI cautiously and, if possible, train it in a neutral manner by filtering information and adjusting its judgments.